“Deepfake” is a buzzword of the moment, driven largely by rapid advances in artificial intelligence (AI) technology. Whether it’s through impersonating celebrities, public figures, or even family members, deepfakes are making it easier for criminals to carry out deceptive schemes of all kinds.

What are deepfakes?

Deepfakes are AI-generated videos, photos, and audio that fabricate or alter someone’s likeness, often to a shockingly realistic degree. These heavily manipulated pieces of digital content can make it appear that a person has said or done something they never did.

While sometimes used for fun or entertainment, deepfakes are increasingly being used for more malicious purposes—such as misinformation and scams. Deepfake scams pose an especially grave threat to older Americans, who reported fraud losses of $3.4 billion in 2023 (an 11% increase from 2022).1

Deepfake technology is becoming more sophisticated by the day, making it harder to tell what’s real from what’s fake. And it’s causing ripples of uncertainty across the nation. In fact, a poll conducted by YouGov in 2023 found that 60% of Americans are “very concerned” about video and audio deepfakes.2 Understanding what deepfakes are, and how to spot them, can help you make informed decisions and protect yourself and those you care about.

What are some common deepfake scams to look out for?

Below are five of the most common types of deepfake scams:

1. Investment scams

When 82-year-old Steve Beauchamp saw a video of billionaire Elon Musk promoting a high-return investment opportunity, he eagerly hopped on board. Within several weeks, Beauchamp had lost more than $690,000 of his retirement savings to digital scammers, who had used AI tools to create a convincing deepfake of Musk.

Deepfakes can be used to impersonate financial experts or famous investors promoting fake investment opportunities. Once a person invests, the criminals disappear into thin air, leaving a trail of financial devastation in their wake.

2. Romance scams

You’ve probably heard of people meeting someone online and getting caught up in a whirlwind romantic courtship, only to discover their love interest is a con artist. Now, with deepfake technology, scammers can create even more convincing fake personas using face-swapping tools and AI-altered photos. These criminals assume a fake identity, nurture a personal connection with their target, and eventually ask for money, usually through online payment apps, bitcoin, or gift cards. In Hong Kong, for instance, a romance scam using deepfake technology lured victims into giving more than $46 million to a group of scammers.

3. Political scams

In early 2024, New Hampshire residents received a strange robocall from someone who sounded like President Joe Biden, telling them not to cast their ballot in the state's presidential primary. As it turned out, the voice was not Biden’s at all; it was generated using AI. This is one example of how deepfake technology is being used to create bogus videos or audio clips of political figures, potentially spreading dangerous misinformation or manipulating public opinion.

4. Extortion scams

With this type of deepfake scam, scammers use AI technology to mimic the voice or appearance of a family member, claiming they are in urgent trouble.

One particularly devious extortion scam is known as the “grandparent scam,” which typically targets older adults over the phone. Using voice cloning technology, criminals impersonate a grandchild or other family member. They tell the relative they're in some kind of serious legal trouble and need money quickly. From January 2020 to June 2021, the FBI Internet Crime Complaint Center (IC3) received over 650 reports of possible grandparent scams, resulting in over $13 million in losses.3

5. Celebrity endorsement ad scams

When video ads of Taylor Swift promoting Le Creuset cookware appeared on Facebook in early 2024, fans weren't entirely surprised, since the singer has made her love for the luxury cookware known. However, these ads were fake, fabricated without Swift’s knowledge or permission using a cloned voice and previous video footage. Bogus product ads featuring celebrity deepfakes can prompt people to buy fraudulent or non-existent products, costing them money and even exposing sensitive personal data in the process.

How can I identify a deepfake video, photo, or audio clip?

Detecting a deepfake video, photo, or audio clip isn’t always easy, but there are some key signs to watch out for.

With videos, look for:

- Unnatural body posture or facial movements (e.g., strange blinking patterns)

- Inconsistent or unnatural placement of reflections (e.g., in eyes and/or glasses) and shadows

- Out-of-sync audio between voice and mouth

- Blurred mouth or chin area

- Excessive pixelation or jaggedness of images

- Discontinuity across the clip (such as a person’s tie color changing inexplicably)

With photos, look for:

- A glossy, artificial, or “too perfect” look

- Features that appear slightly “off” or artificial, such as distorted legs, extra or webbed fingers, or a facial structure that doesn’t look quite right

- Distorted, illegible text and numbers

- Facial skin color that doesn’t match the rest of the body

With audio, look for:

- A robotic or “flat” tone of voice that sounds too perfect or lacks the natural conversational fluctuations of a human speaker

- No background noise, or unnatural-sounding background noise

How can I protect myself from a deepfake scam?

In addition to watching out for the signs above, it’s a good idea to practice healthy skepticism and adopt these smart habits:

- Limit the personal information you share online: Avoid sharing too much about yourself on social media platforms. Scammers can weaponize this information to create more convincing deepfakes.

- Confirm identities: If you receive an unexpected call from someone asking for personal information (e.g., your Social Security number) or requesting money, be very cautious. Hang up and contact the person or organization directly using a reliable channel (such as a phone number listed on a company’s official website). If the caller claims to be a family member, ask a question only the real person could answer, or hang up and call them back using their known phone number.

- Verify the source: If you see a surprising video or image online, verify its authenticity through reputable news sources or official channels. You can also visit sites like FactCheck.org and PolitiFact, which are independent sites focused on debunking misinformation.

- Pause and think: "Any content you see online that makes you feel a strong emotion - anger, fear, disbelief - is a red flag," said Lynette Owens, VP Global Consumer Education & Marketing at Trend Micro. "Whether it is a deepfake scam or misinformation, there's a moment where you should pause and think before believing and replying right away." If you see a photo or video or you’re talking to a person and something feels "off," it probably is. Instead of giving in to your emotions, take a moment to verify that what you’re seeing or hearing is actually true.

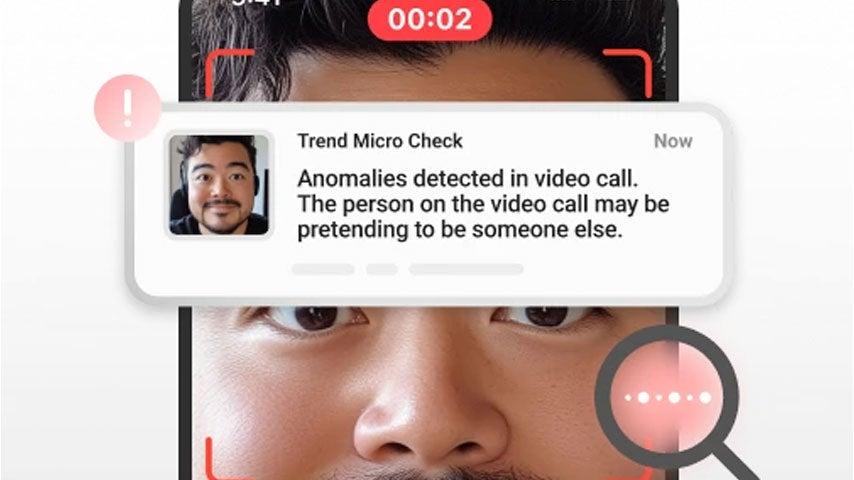

- Use the latest deepfake detection tools: Mobile apps such as Trend Micro Check (available for download on the App Store and Google Play) can help you check whether the media you’re looking at is real or manipulated, giving you more confidence as you navigate the digital world.

What should I do if I’ve been targeted by a deepfake scam?

If you believe you’re the target of a scheme or you’ve fallen for a deepfake scam, take immediate action:

- Protect your financial accounts: Notify your banks and credit card companies that you may have been scammed. Change any compromised passwords and enable two-factor authentication to secure your online accounts.

- Contact authorities: Report a suspected deepfake scam to law enforcement or agencies like the IC3, Federal Trade Commission (FTC), or your local consumer protection office.

- Tell people you know: Encourage your friends, family, and neighbors, especially older adults, to learn about deepfake scams. That way, they, too, can take steps to protect themselves.

Sources

1. Federal Bureau of Investigation (FBI), Los Angeles Field Office. FBI Releases 2023 Elder Fraud Report with Tech Support Scams Generating the Most Complaints and Investment Scams Proving the Costliest. May 2, 2024. Found on the internet at https://www.fbi.gov/contact-us/field-offices/losangeles/news/fbi-releases-2023-elder-fraud-report-with-tech-support-scams-generating-the-most-complaints-and-investment-scams-proving-the-costliest

2. YouGov. Majorities of Americans are concerned about the spread of AI deepfakes and propaganda. September 12, 2023. Found on the internet at https://today.yougov.com/technology/articles/46058-majorities-americans-are-concerned-about-spread-ai

3. Federal Bureau of Investigation (FBI), Miami Field Office. FBI Miami Warns of Grandparent Fraud Scheme. March 22, 2023. Found on the internet at https://www.fbi.gov/contact-us/field-offices/miami/news/fbi-miami-warns-of-grandparent-fraud-scheme